Have you ever wondered what it would be like to have a conversation with your younger or older self? Thanks to Runway’s latest tool, Act-One, that whimsical idea is now closer to reality than ever before. When I first heard about Act-One, a new feature in Runway’s Gen-3 Alpha models, my head was brimming with ideas. The potential for creativity was immense, and I knew I had to dive in.

Act-One lets you animate a character image by uploading a driving performance video: the expressions and mouth movements in your video are transferred to the character with remarkable precision. The idea of bringing still images to life fascinated me, so I put the technology to the test by creating a video in which my 10-year-old self meets my 75-year-old self.

Here’s the final creation:

In this blog post, I’ll walk you through the steps I took to create this heartwarming and thought-provoking video, highlighting both the technical and human elements of the journey.

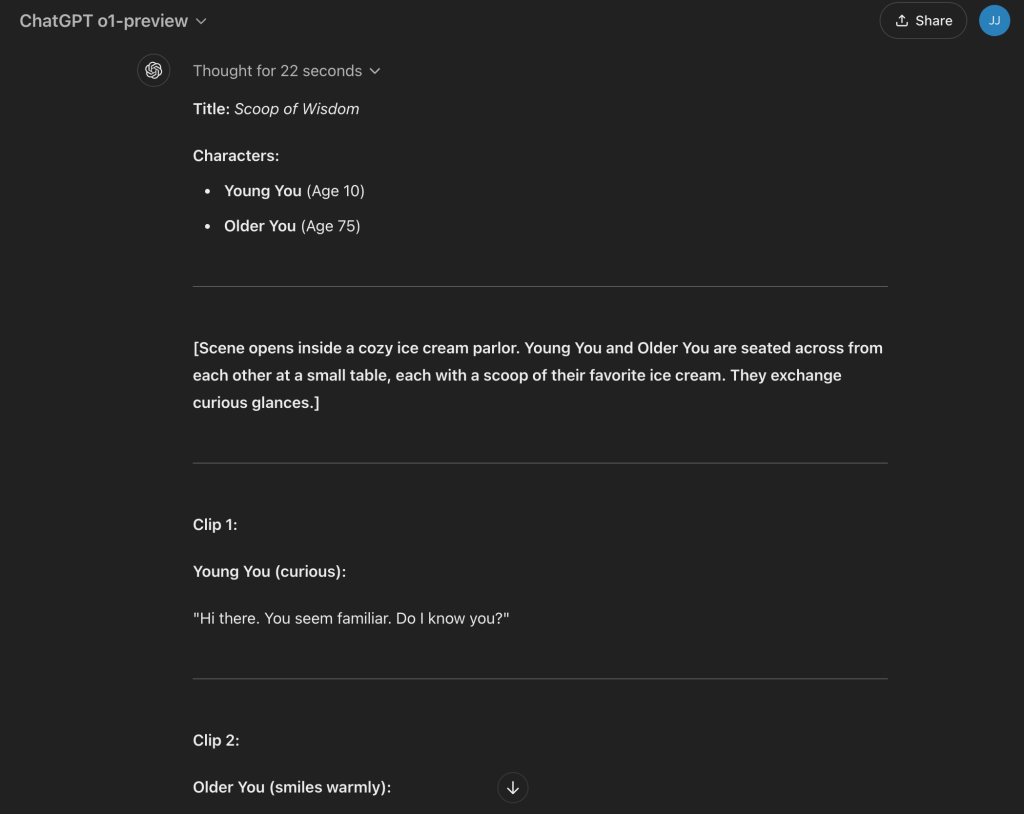

Step 1: Crafting the Concept and Script using ChatGPT

The first challenge was to come up with an idea that would showcase the power of Act-One while resonating on a personal level. I turned to ChatGPT, specifically the o1-preview version, for a brainstorming session. After discussing several possibilities, the concept of my younger self interviewing my older self stood out. It was perfect: a blend of personal reflection and technological innovation.

We collaborated on a script that was both heartfelt and humorous. The dialogue explored universal themes like the importance of relationships over money, finding passion in work that contributes positively to the world, and learning to care less about others’ opinions.

Step 2: Recording the Performances on My Mobile Phone

With the script in hand, I moved on to recording the performances for each scene. Simplicity was key here. I used my mobile phone to record videos of myself acting out both the younger and older versions. For the younger self, I tried to embody the curiosity and energy of a 10-year-old, while for the older self, I adopted a more reflective and measured demeanor.

To adhere to Act-One’s best practices, I ensured the following:

- Well-lit environment: Proper lighting to capture clear facial features.

- Framing: Positioned myself from the shoulders up, facing forward.

- Minimal movement: Kept body movement to a minimum to focus on facial expressions.

- Consistent framing: Made sure my face stayed within the frame throughout each video. Shooting in portrait mode didn’t work well: I kept getting a “too much movement” error (quoted in Step 4). I’d recommend shooting in landscape mode and avoiding too much body movement.
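The orientation advice is easy to check before uploading. Below is a minimal sketch, assuming you already know each clip's stored pixel dimensions and rotation flag (for example from your phone's file info). The function name and the sample clips are hypothetical and not part of Runway. Phones often store a wide frame plus a rotation flag, so the sketch classifies the clip as a viewer would see it. Whether Act-One looks at the stored or the displayed dimensions is an assumption here.

```python
def display_orientation(width: int, height: int, rotation: int = 0) -> str:
    """Classify a clip as 'landscape', 'portrait', or 'square' as a viewer sees it."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    # A 90 or 270 degree rotation flag swaps the displayed width and height.
    if rotation % 180 == 90:
        width, height = height, width
    if width > height:
        return "landscape"
    if height > width:
        return "portrait"
    return "square"


# Hypothetical clips: (stored width, stored height, rotation flag in degrees)
clips = {
    "younger_self.mov": (1920, 1080, 0),
    "older_self.mov": (1920, 1080, 90),  # stored wide, but displayed upright
}
for name, (w, h, rot) in clips.items():
    kind = display_orientation(w, h, rot)
    note = "ok" if kind == "landscape" else "consider re-shooting in landscape"
    print(f"{name}: {kind} ({note})")
```

A clip that reports as portrait here is a candidate for re-shooting before spending credits on it.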

Step 3: Creating the Avatars of My Younger and Older Selves

Next came the visual transformation. I needed images of my 10-year-old and 75-year-old selves to serve as character references. I revisited a guide I had shared earlier on creating AI avatars using a LoRA (Low-Rank Adaptation) model. The guide, Unlock Your Digital Twin, was instrumental in helping me generate accurate and personalized images.

For those without a custom LoRA, tools like Midjourney can also produce character images using reference photos, though the results might be less precise. The key considerations for the character images were:

- Clarity: Well-lit images with defined facial features.

- Framing: Single face framed from the shoulders up, facing forward.

- Compliance: Adherence to Runway’s Trust & Safety standards.

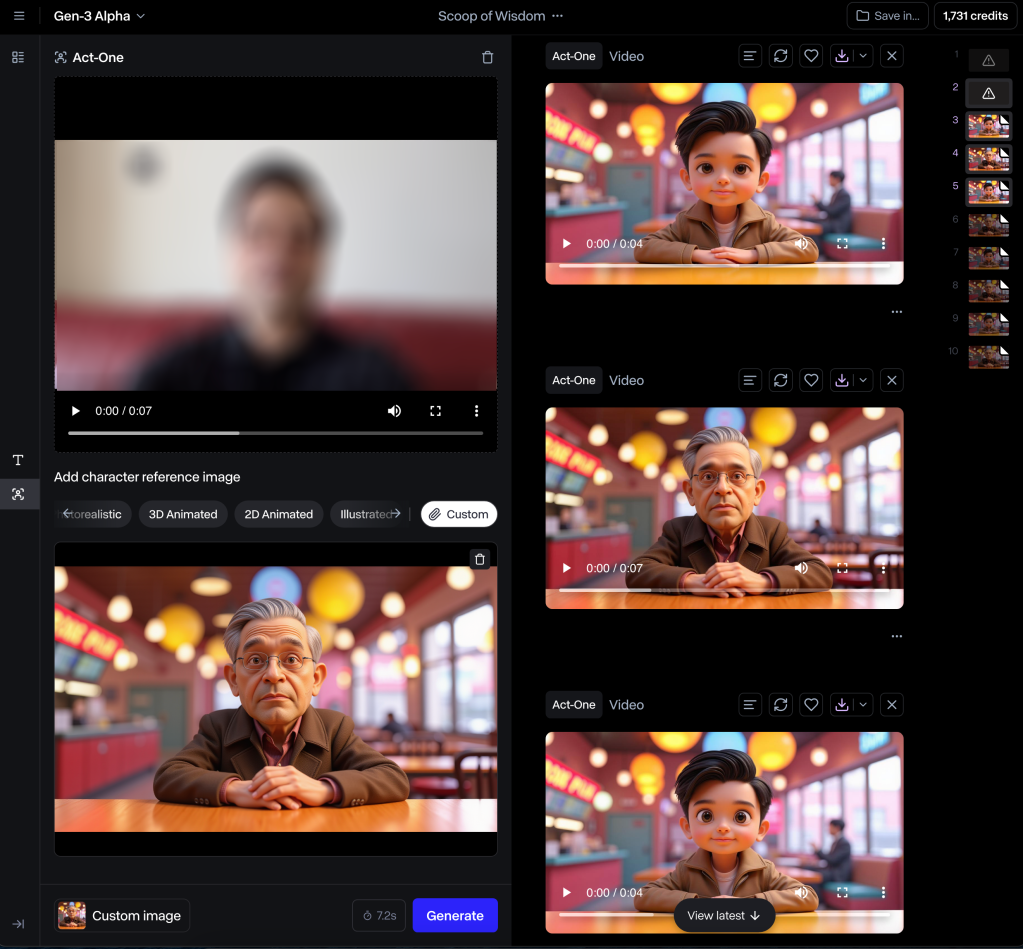

Step 4: Animating with Runway’s Act-One

Now for the exciting part: bringing it all together using Runway’s Act-One tool. Here’s how I did it:

- Uploading the Driving Performance: I uploaded the videos from my phone as the driving performances. Initially, I tried using portrait videos but encountered an error stating, “We detected too much movement from your video.” Switching to landscape mode with minimal movement resolved this issue.

- Selecting the Character Images: I uploaded the avatars of my younger and older selves as the character references.

- Generating the Output: Act-One charges about 10 credits per second with a 50-credit minimum. For my video, this was a reasonable investment. The tool processed the inputs and generated animated videos that preserved the details of the original images while matching my expressions impressively well.
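To budget credits before generating, the pricing mentioned above can be turned into a quick estimate. This is a rough sketch based only on the figures quoted in this post (about 10 credits per second, 50-credit minimum). Rounding partial seconds up is my assumption, and the clip lengths are hypothetical, so check Runway's current pricing before relying on the numbers.

```python
import math

CREDITS_PER_SECOND = 10  # approximate rate quoted in this post
MINIMUM_CREDITS = 50     # minimum charge quoted in this post


def estimate_credits(duration_seconds: float) -> int:
    """Estimate the credit cost of a single Act-One generation."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    # Assumption: partial seconds are billed as whole seconds (rounded up).
    billed_seconds = math.ceil(duration_seconds)
    return max(MINIMUM_CREDITS, billed_seconds * CREDITS_PER_SECOND)


# Hypothetical clip lengths in seconds
clips = {"younger self": 3.0, "older self": 12.5}
for name, seconds in clips.items():
    print(f"{name}: ~{estimate_credits(seconds)} credits")
```

Because of the minimum, very short clips cost the same as a five-second clip under these assumptions, so it can be worth combining short lines into one take.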

Best Practices Reminder:

- Driving Performance:

  - Well-lit, forward-facing videos.

  - Minimal movement and consistent framing.

  - Clear facial expressions without occlusions.

- Character Images:

  - High-quality images with defined facial features.

  - Should match the framing and angle of the driving performance.

Step 5: Finalizing the Video with Final Cut Pro

With the animated clips ready, I turned to Final Cut Pro to assemble the video. Initially, I considered using ElevenLabs’ voice changer, but I found that applying audio effects to my own recordings yielded a more authentic result. Here’s what I did:

- Voice Modification: Used Final Cut Pro’s audio effects to adjust the pitch and tone, making my voice sound younger and older where needed.

- Background Music: To add emotional depth, I created an instrumental track using Suno. The warm, nostalgic music complemented the dialogue perfectly.

- Editing: I synchronized the audio with the video clips, ensuring smooth transitions between the younger and older selves.

Considerations for Optimal Results

Throughout the process, I learned some valuable lessons:

- Input Quality Matters: The better the quality of your videos and images, the more impressive the output.

- Follow Best Practices: Adhering to Runway’s guidelines significantly reduces errors and enhances the final product.

- Experimentation is Key: Don’t be afraid to tweak and adjust. Small changes can lead to big improvements.

- Landscape Over Portrait: Using landscape-oriented videos can prevent movement-related errors during processing.

Final Thoughts: Empowering Creativity with AI

This project was not just about testing a new tool; it was a journey into the future of content creation. Runway’s Act-One opens up possibilities that were once the realm of science fiction. It empowers individuals to create engaging, personalized content without the need for extensive resources or technical expertise.

However, as with any technological advancement, it’s essential to consider the broader implications:

- Accessibility vs. Professionalism: While tools like Act-One democratize content creation, they also challenge traditional methods and industries, such as acting and animation.

- Ethical Considerations: Responsible use is crucial. Respect for intellectual property and adherence to ethical standards must guide our creative endeavors.

- Future of Storytelling: AI-driven tools can enhance storytelling, allowing for more diverse and innovative narratives that connect with audiences on a deeper level.

In the end, the experience of “meeting” my younger and older selves was both fun and profoundly reflective. It reminded me of the timelessness of human curiosity and the ever-evolving nature of technology. I encourage you to explore these tools and see where your creativity takes you.